We’ve finally received our new inference hardware! As part of this process, we’re currently migrating our operations to a brand new compute cluster. You may have noticed some speed upgrades already, but this change will improve server and network stability, as well.

Since everything is finally coming together, it is time to announce the release schedule for our upcoming 70-billion-parameter text generation model, Llama 3 Erato.

Built with Meta Llama 3: Erato

In order to add our special sauce, we continued pre-training the Llama 3 70B base model on hundreds of billions of tokens of training data, spending more compute power than we did even for our previous text generation model, Kayra. As always, we finetuned it on our high-quality literature dataset, making it our most powerful storytelling model yet.

Llama 3 Erato will be released for Opus users next week, so get ready: the wait is almost over!

Until then, we are busy migrating to the new cluster and switching our text generation models, Kayra and Clio, to a new inference stack, which serves these unquantized models more efficiently. However, this stack does not play well with our Classifier-Free Guidance (CFG) sampler, so we will need to say goodbye to CFG sampling.

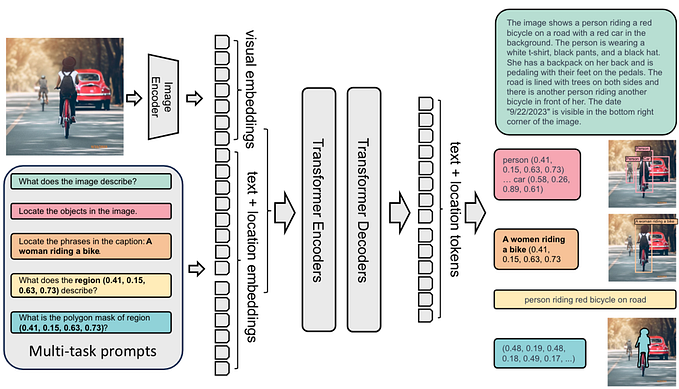

To make up for this, we are releasing two new samplers, which will also be supported for Erato: Min P and Unified Sampling.

The popular Min P sampler sets a simple threshold at a proportion of the top token’s probability, and prunes any tokens below it.
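
For the curious, here is a minimal sketch of the idea in Python. It is an illustration of the description above and not our production code; the function name `min_p_filter` and the choice to renormalize the surviving probabilities are assumptions made for the example.

```python
def min_p_filter(probs, min_p):
    """Illustrative Min P filter (hypothetical helper, not the production sampler).

    probs: list of token probabilities that sum to 1.
    min_p: fraction of the top token's probability used as the cutoff.
    """
    # The threshold scales with the most likely token's probability.
    threshold = min_p * max(probs)
    # Prune every token that falls below the threshold.
    kept = [p if p >= threshold else 0.0 for p in probs]
    # Renormalize so the surviving tokens form a distribution again (assumption).
    total = sum(kept)
    return [p / total for p in kept]


if __name__ == "__main__":
    # With min_p = 0.2 and a top probability of 0.5, the cutoff is 0.1,
    # so only the last token (0.05) is pruned.
    print(min_p_filter([0.5, 0.3, 0.15, 0.05], 0.2))
```

Because the cutoff follows the top token, the filter is strict when the model is confident and permissive when many tokens are similarly likely.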

Unified Sampling is designed to replace your entire sampling chain, so you can use it alone without any other samplers. It’s based on new research, so the quality should be a minor improvement over your existing presets, while being much simpler.

To use it as intended, navigate to the Change Settings Order button, enable Unified and Randomness, and disable all the others. Then set Randomness to 1. Unified has three parameters: Linear, Quad, and Conf. Increasing Linear will prioritize high-probability tokens. Increasing Quad will delete the lowest-probability tokens. Increasing Conf will prioritize the highest-probability tokens, but only when the top token's probability is small.
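
To build some intuition for how the three parameters interact, here is a rough Python sketch. The exact formula is an assumption made for illustration (this post does not specify it): each token's log-probability is scaled by `linear + conf * entropy` and then penalized by `quad` times its square. The function name `unified_probs` is hypothetical, and the docs remain the authoritative reference.

```python
import math


def unified_probs(logits, linear=1.0, quad=0.0, conf=0.0):
    """Illustrative sketch of a Unified-style transform (assumed formula)."""
    # Convert raw logits to log-probabilities (numerically stable log-softmax).
    m = max(logits)
    lse = m + math.log(sum(math.exp(x - m) for x in logits))
    logp = [x - lse for x in logits]

    # Entropy is high when the top token's probability is small,
    # which is when Conf should have the most effect.
    entropy = -sum(math.exp(lp) * lp for lp in logp)

    # Assumed transform: Linear and Conf sharpen the distribution,
    # Quad penalizes very unlikely tokens most heavily.
    scaled = [lp * (linear + conf * entropy) - quad * lp * lp for lp in logp]

    # Renormalize back into probabilities.
    m2 = max(scaled)
    exps = [math.exp(s - m2) for s in scaled]
    z = sum(exps)
    return [e / z for e in exps]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.0, -1.0]
    print(unified_probs(logits))               # plain softmax
    print(unified_probs(logits, linear=2.0))   # favors likely tokens
    print(unified_probs(logits, quad=1.0))     # suppresses the unlikely tail
    print(unified_probs(logits, conf=1.0))     # sharpens more when entropy is high
```

With `linear=1`, `quad=0`, and `conf=0`, this sketch reduces to an ordinary softmax, which is why it pairs naturally with Randomness set to 1.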

To learn more, head on over to our docs.

We’re excited to see the new Presets you create and to hear how they perform on your stories.

See you next week, when you get to meet the newest addition to our text generation model roster!